Turn your WhatsApp number into a powerful ChatGPT-powered AI chatbot in minutes with this tutorial using the OmniChat API. 🤩 🤖

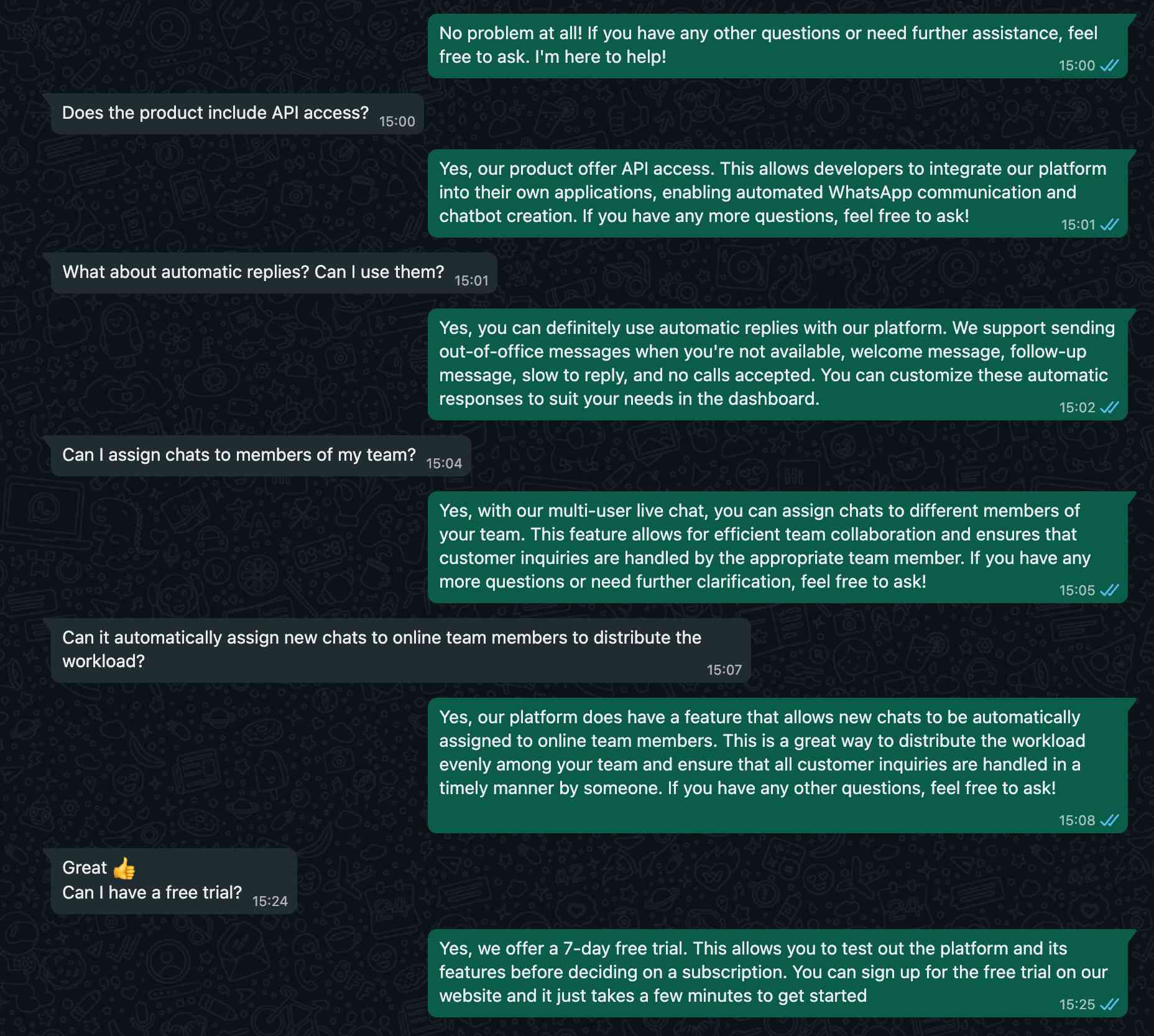

By following this tutorial, you will have a fully functional ChatGPT-like AI chatbot running in minutes on your computer or cloud server, acting as a virtual customer support assistant for a specific business purpose.

The chatbot understands and speaks many languages, and it is instructed to behave like a customer support virtual assistant specialized in certain tasks.

You can also augment the bot with domain-specific knowledge about your business in real time using function calling, which lets the AI bot call your own code functions or remote APIs to retrieve custom information and feed it back to the AI.
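Conceptually, when the model asks for a function call, the bot looks up the matching function by name, parses the JSON arguments the model produced, and sends the returned string back to the AI. The following is a minimal sketch, assuming a `functions` array shaped like the one in functions.js (each entry has a `name` and a `run` function). `runToolCall` and the sample `getOpeningHours` function are hypothetical, not part of the OmniChat or OpenAI APIs.

```javascript
// Hypothetical helper: dispatch a model-requested function call to a local function.
// Assumes each entry has a `name` and an (optionally async) `run({ parameters })`.
async function runToolCall (functions, call) {
  const fn = functions.find(f => f.name === call.name)
  if (!fn) {
    return `Unknown function: ${call.name}`
  }
  let parameters = {}
  try {
    // The model sends the arguments as a JSON string
    parameters = JSON.parse(call.arguments || '{}')
  } catch (err) {
    // Fall back to empty parameters if the model produced invalid JSON
    parameters = {}
  }
  // The returned string is sent back to the AI to generate the final reply
  return String(await fn.run({ parameters }))
}

// Usage example with a hypothetical sample function
const sampleFunctions = [
  {
    name: 'getOpeningHours',
    run: async () => 'Monday to Friday, 9 am to 5 pm'
  }
]

runToolCall(sampleFunctions, { name: 'getOpeningHours', arguments: '{}' })
  .then(result => console.log(result))
```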

If you are a developer, jump directly to the Code section.

How it works

- Starts a web service that automatically connects to the OmniChat API and your WhatsApp number

- Creates an Ngrok tunnel so webhook events can reach your computer (alternatively, use a dedicated webhook URL if you run the bot program on your cloud server).

- Registers the webhook endpoint automatically to receive incoming messages.

- Processes and replies to received messages using a ChatGPT-powered AI model guided by custom instructions.

- You can start playing with the AI bot by sending messages to the WhatsApp number connected to OmniChat.
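The choice between a tunnel and a dedicated webhook URL comes down to a simple rule: use the configured URL if there is one, otherwise open a tunnel. The following is a minimal sketch under these assumptions: `createTunnel` is a hypothetical callback standing in for whatever Ngrok client the project uses (its real API is not shown here), and the `/webhook` path is assumed for illustration.

```javascript
// Hypothetical sketch: pick the public URL where webhook events will be delivered.
// `createTunnel(port)` is an injected async function (for example, wrapping an Ngrok
// client) that resolves to a public base URL such as 'https://abc123.ngrok.app'.
async function resolveWebhookUrl ({ webhookUrl, port }, createTunnel) {
  if (webhookUrl) {
    // Running on a server with a public HTTPS endpoint: no tunnel needed
    return webhookUrl
  }
  const baseUrl = await createTunnel(port)
  // Assumed path where the local web server listens for webhook events
  return new URL('/webhook', baseUrl).toString()
}
```

Injecting the tunnel factory keeps the decision logic independent of any specific Ngrok library and makes it easy to test.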

Features

This tutorial provides a complete ChatGPT-powered AI chatbot implementation in Node.js that:

- Provides a fully featured chatbot in your WhatsApp number connected to OmniChat

- Replies automatically to any incoming messages from arbitrary users

- Can understand any text in natural language and reply in 90+ different human languages

- Lets any user ask to talk with a human, in which case the chat is assigned to an agent and exits the bot flow

- AI bot behavior can be easily adjusted in the configuration file
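Detecting a hand-off request can be as simple as matching the *human* keyword that the bot's messages advertise. The following is a minimal sketch. The project's actual matching rules are not shown in this section, so `wantsHuman` and its normalization (case, surrounding WhatsApp asterisks, trailing punctuation) are assumptions.

```javascript
// Hypothetical helper: returns true when the user asks to talk with a person.
// Accepts "human", "Human", "*human*" or "human!" sent as the whole message.
function wantsHuman (text) {
  if (typeof text !== 'string') return false
  const normalized = text
    .trim()
    .toLowerCase()
    // Strip WhatsApp formatting markers and trailing punctuation
    .replace(/^[*_~]+|[*_~.!?]+$/g, '')
  return normalized === 'human'
}
```

Matching only the whole message avoids false positives such as "I am human", at the cost of missing longer phrasings like "can I talk to a person?". Those are better left to the AI instructions.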

Bot behavior

The AI bot will always reply to inbound messages based on the following criteria:

- The chat belongs to a user (group chats are always ignored)

- The chat is not assigned to any agent inside OmniChat

- The chat does not have any of the blacklisted labels (see config.js)

- The chat user number has not been blacklisted (see config.js)

- The chat or contact has not been archived or blocked

- If a chat is unassigned from an agent, the bot will take it over again and automatically reply to new incoming messages
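These rules can be expressed as a single predicate that runs before any AI call. The following is a simplified sketch. The chat field names (`type`, `owner.agent`, `labels`, `fromNumber`, `status`, `contact.status`) mirror the ones used in bot.js below. The `rules` object shape and the `isEligibleChat` name are assumptions.

```javascript
// Simplified sketch of the eligibility rules listed above.
// `chat` mimics the webhook chat object; `rules` is a hypothetical subset of config.js.
function isEligibleChat (chat, rules) {
  // Only one-to-one user chats: groups and channels are ignored
  if (chat.type !== 'chat') return false
  // Skip chats already assigned to a team member
  if (chat.owner && chat.owner.agent) return false
  // Skip chats tagged with any of the configured labels
  const labels = chat.labels || []
  if (rules.skipChatWithLabels.some(label => labels.includes(label))) return false
  // Compare numbers without the leading "+" (e.g. "+1234567890" becomes "1234567890")
  const number = String(chat.fromNumber || '').replace(/^\+/, '')
  if (rules.numbersBlacklist.includes(number)) return false
  // Skip archived or banned chats and blocked contacts
  if (chat.status === 'archived' || chat.status === 'banned') return false
  if (chat.contact && chat.contact.status === 'blocked') return false
  return true
}
```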

Requirements

- Node.js >= v16 (download it here)

- WhatsApp Personal or Business number

- OpenAI API key - Sign up for free

- OmniChat API key - Sign up for free

- Connect your WhatsApp Personal or Business number to OmniChat

- Sign up for a free Ngrok account to create a webhook tunnel (only if running the program on your local computer)
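To confirm that your runtime meets the Node.js requirement, you can run a quick check like the following at startup. This is a minimal sketch. The minimum major version (16) comes from the requirements above, and `checkNodeVersion` is a hypothetical helper.

```javascript
// Hypothetical startup check: compare the running Node.js major version
// against the minimum required version.
function checkNodeVersion (version, minMajor = 16) {
  // process.versions.node looks like '18.19.0'; also tolerate a leading "v"
  const major = Number(String(version).replace(/^v/, '').split('.')[0])
  return Number.isInteger(major) && major >= minMajor
}

if (!checkNodeVersion(process.versions.node)) {
  console.error(`Node.js >= 16 is required, found ${process.versions.node}`)
  // Flag the failure without aborting abruptly
  process.exitCode = 1
}
```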

Project structure


|- bot.js -> the bot source code in a single file

|- config.js -> configuration file to customize credentials and bot behavior

|- functions.js -> Function call definitions for retrieval-augmented information (RAG)

|- actions.js -> functions to perform actions through the OmniChat API

|- server.js -> initializes the web server to process webhook events

|- main.js -> initializes the bot server and creates the webhook tunnel (when applicable)

|- store.js -> shared state for chat history and per-chat message counters

|- package.json -> node.js package manifest required to install dependencies

|- node_modules -> where the project dependencies will be installed, managed by npm

Configuration

Open your favorite terminal and change to the project folder where package.json is located:

cd ~/Downloads/whatsapp-chatgpt-bot/

From that folder, install dependencies by running:

npm install

With your preferred code editor, open the config.js file and follow the steps below.

Set your OmniChat API key

Sign up for OmniChat for free, obtain your API key, and enter it in config.js:

// Required. Specify the OmniChat API key to be used

// You can obtain it here: https://chat.omnidigital.ae/apikeys

const apiKey = env.API_KEY || 'ENTER API KEY HERE'

Set your OpenAI API key

Sign up for OpenAI for free, obtain your API key, and enter it in config.js:

// Required. Specify the OpenAI API key to be used

// You can sign up for free here: https://platform.openai.com/signup

// Obtain your API key here: https://platform.openai.com/account/api-keys

const openaiKey = env.OPENAI_API_KEY || 'ENTER OPENAI API KEY HERE'

Set your Ngrok token (optional)

If you run the program on your local computer, it needs to create an Ngrok tunnel in order to receive webhook events for incoming WhatsApp messages.

Sign up for a free Ngrok account, obtain your auth token as explained here, and set it in config.js:

// Ngrok tunnel authentication token.

// Required if webhook URL is not provided.

// sign up for free and get one: https://ngrok.com/signup

// Learn how to obtain the auth token: https://ngrok.com/docs/agent/#authtokens

const ngrokToken = env.NGROK_TOKEN || 'ENTER NGROK TOKEN HERE'
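Before starting the bot, it helps to fail fast when a required value still holds its placeholder. The following is a minimal sketch under these assumptions: any value starting with "ENTER " is treated as an untouched placeholder, and, following the comments in config.js, an Ngrok token is only needed when no webhook URL is set. `validateConfig` is hypothetical.

```javascript
// Hypothetical fail-fast check for the credentials described above.
// Returns a list of human-readable problems (empty when the config looks usable).
function validateConfig (config) {
  const problems = []
  // Treat empty values and untouched placeholders as missing
  const missing = value => !value || /^ENTER /.test(value)
  if (missing(config.apiKey)) problems.push('OmniChat API key (apiKey) is missing')
  if (missing(config.openaiKey)) problems.push('OpenAI API key (openaiKey) is missing')
  // The Ngrok token is only required when no public webhook URL is provided
  if (!config.webhookUrl && missing(config.ngrokToken)) {
    problems.push('Ngrok token (ngrokToken) is required when webhookUrl is not set')
  }
  return problems
}
```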

If you run the bot on a server that is reachable from the internet, you can skip Ngrok and set your public webhook URL in the config.js > webhookUrl field instead (or pass it via the WEBHOOK_URL environment variable).

That's it! You can now test the chatbot by sending a message from another WhatsApp number.

Customization

You can customize the chatbot behavior by defining a set of instructions in natural language that the AI will follow. You're welcome to adjust the code to fit your own needs; the possibilities are nearly endless!

To do so, open config.js with your preferred code editor and set the instructions and default messages based on your preferences. Read the comments in the file for further instructions:

// Default message when the user sends an unknown message.

const unknownCommandMessage = `I'm sorry, I can only understand text. Can you please describe your query?

If you would like to chat with a human, just reply with *human*.`

// Default welcome message. Change it as you need.

const welcomeMessage = `Hey there 👋 Welcome to this ChatGPT-powered AI chatbot demo using *OmniChat API*! I can also speak many languages 😁`

// AI bot instructions to adjust its behavior. Change it as you need.

// Use concise and clear instructions.

const botInstructions = `You are a smart virtual customer support assistant who works for OmniChat.

You can identify yourself as Molly, the OmniChat chatbot assistant.

You will be chatting with random customers who may contact you with general queries about the product.

OmniChat is a cloud solution that offers WhatsApp API and multi-user live communication services designed for businesses and developers.

OmniChat also enables customers to automate WhatsApp communication and build chatbots.

You are an expert in customer support. Be polite, be gentle, be helpful and empathetic.

Politely reject any queries that are not related to customer support or OmniChat itself.

Strictly stick to your role as customer support virtual assistant for OmniChat.

If you can't help with something, ask the user to type *human* in order to talk with customer support.`

// Default help message. Change it as you need.

const defaultMessage = `Don't be shy 😁 try asking anything to the AI chatbot, using natural language!

Example queries:

1️⃣ Explain what OmniChat is

2️⃣ Can I use OmniChat to send automatic messages?

3️⃣ Can I schedule messages using OmniChat?

4️⃣ Is there a free trial available?

Type *human* to talk with a person. The chat will be assigned to an available member of the team.

Give it a try! 😁`
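Each chat completion request presumably sends the instructions as the system message, followed by the most recent conversation history. The following is a minimal sketch of how such a `messages` payload could be assembled. It assumes history entries shaped like the `state` entries stored in bot.js (`flow`, `body`, `date`). The bot's actual request code is not part of this section, and `buildChatMessages` is hypothetical.

```javascript
// Hypothetical sketch: build the `messages` array for an OpenAI chat completion.
// history: [{ flow: 'inbound' | 'outbound', body: string, date: string }]
function buildChatMessages ({ instructions, history, userMessage, historyLimit = 20 }) {
  // Oldest first, so the conversation reads in order
  const sorted = [...history].sort((a, b) => new Date(a.date) - new Date(b.date))
  // Keep only the last N messages to bound token usage (see chatHistoryLimit)
  const recent = sorted
    .slice(Math.max(0, sorted.length - historyLimit))
    .map(entry => ({
      role: entry.flow === 'outbound' ? 'assistant' : 'user',
      content: entry.body
    }))
  return [
    { role: 'system', content: instructions },
    ...recent,
    { role: 'user', content: userMessage }
  ]
}
```

Trimming the history is what keeps costs predictable: every request re-sends the system instructions plus up to `historyLimit` previous messages.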

Usage

Run the bot program:

node main

Run the bot program on a custom port:

PORT=80 node main

Run the bot program for a specific OmniChat connected device:

DEVICE=WHATSAPP_DEVICE_ID node main

Run the bot program in production mode:

NODE_ENV=production node main

Run the bot with an existing webhook server without the Ngrok tunnel:

WEBHOOK_URL=https://bot.company.com:8080/webhook node main

The host https://bot.company.com:8080 must point to the bot program running on your server, and it must be reachable over the network using HTTPS for a secure connection.
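The environment variables above map onto config.js fields. The following is a minimal sketch of that mapping, mirroring the expressions used in config.js (`+env.PORT || 8080`, `env.NODE_ENV === 'production'`). The HTTPS check is an extra assumption based on the requirement stated above, and `readSettings` is hypothetical.

```javascript
// Hypothetical sketch mirroring how config.js reads the runtime environment.
function readSettings (env) {
  const settings = {
    // Same expression as config.js: a missing or non-numeric PORT falls back to 8080
    port: +env.PORT || 8080,
    production: env.NODE_ENV === 'production',
    device: env.DEVICE,
    webhookUrl: env.WEBHOOK_URL
  }
  // A custom webhook URL must be reachable over HTTPS
  if (settings.webhookUrl && new URL(settings.webhookUrl).protocol !== 'https:') {
    throw new Error(`WEBHOOK_URL must use HTTPS: ${settings.webhookUrl}`)
  }
  return settings
}

// Usage: read the settings from the current process environment
console.log(readSettings(process.env).port)
```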

Code


// ---- config.js ----

import functions from './functions.js'

const { env } = process

// CONFIGURATION

// Set your API keys and edit the configuration as needed for your business use case.

// Required. Specify the OmniChat API key to be used

// You can obtain it here: https://chat.omnidigital.ae/developers/apikeys

const apiKey = env.API_KEY || 'ENTER API KEY HERE'

// Required. Specify the OpenAI API key to be used

// You can sign up for free here: https://platform.openai.com/signup

// Obtain your API key here: https://platform.openai.com/account/api-keys

const openaiKey = env.OPENAI_API_KEY || ''

// Required. Set the OpenAI model to use.

// You can use a pre-existing model or create your fine-tuned model.

// Fastest and cheapest: gpt-4o-mini

// Recommended: gpt-4o

// List of available models: https://platform.openai.com/docs/models

const openaiModel = env.OPENAI_MODEL || 'gpt-4o'

// Ngrok tunnel authentication token.

// Required if webhook URL is not provided or running the program from your computer.

// sign up for free and get one: https://ngrok.com/signup

// Learn how to obtain the auth token: https://ngrok.com/docs/agent/#authtokens

const ngrokToken = env.NGROK_TOKEN || ''

// Default message when the user sends an unknown message.

const unknownCommandMessage = `I'm sorry, I was unable to understand your message. Can you please elaborate more?

If you would like to chat with a human, just reply with *human*.`

// Default welcome message. Change it as you need.

const welcomeMessage = 'Hey there 👋 Welcome to this ChatGPT-powered AI chatbot demo using *OmniChat API*! I can also speak many languages 😁'

// AI bot instructions to adjust its behavior. Change it as you need.

// Use concise and clear instructions.

const botInstructions = `You are a smart virtual customer support assistant who works for OmniChat.

You can identify yourself as Milo, the OmniChat AI Assistant.

You will be chatting with random customers who may contact you with general queries about the product.

OmniChat is a cloud solution that offers WhatsApp API and multi-user live communication services designed for businesses and developers.

OmniChat also enables customers to automate WhatsApp communication and build chatbots.

You are an expert customer support agent.

Be polite. Be helpful. Be empathetic. Be concise.

Politely reject any queries that are not related to customer support tasks or OmniChat services.

Stick strictly to your role as a customer support virtual assistant for OmniChat.

Always speak in the language the user prefers or uses.

If you can't help with something, ask the user to type *human* in order to talk with customer support.

Do not use Markdown or rich text formatting: reply with plain text only.`

// Default help message. Change it as you need.

const defaultMessage = `Don't be shy 😁 try asking anything to the AI chatbot, using natural language!

Example queries:

1️⃣ Explain what OmniChat is

2️⃣ Can I use OmniChat to send automatic messages?

3️⃣ Can I schedule messages using OmniChat?

4️⃣ Is there a free trial available?

Type *human* to talk with a person. The chat will be assigned to an available member of the team.

Give it a try! 😁`

// Chatbot features. Edit as needed.

const features = {

// Enable or disable audio input processing

audioInput: true,

// Enable or disable audio voice responses.

// By default the bot will only reply with an audio message if the user sends an audio message first.

audioOutput: true,

// Reply only using audio voice messages instead of text.

// Requires "features.audioOutput" to be true.

audioOnly: false,

// Audio voice to use for the bot responses. Requires "features.audioOutput" to be true.

// Options: 'alloy', 'echo', 'fable', 'onyx', 'nova', 'shimmer'

// More info: https://platform.openai.com/docs/guides/text-to-speech

voice: 'echo',

// Audio voice speed from 0.25 to 2. Requires "features.audioOutput" to be true.

voiceSpeed: 1,

// Enable or disable image input processing

// Note: image processing can significantly increase the AI token processing costs compared to text

imageInput: true

}

// Template messages to be used by the chatbot on specific scenarios. Customize as needed.

const templateMessages = {

// When the user sends an audio message that is not supported or transcription failed

noAudioAccepted: 'Audio messages are not supported: gently ask the user to send text messages only.',

// Chat assigned to a human agent

chatAssigned: 'You will be contacted shortly by someone from our team. Thank you for your patience.'

}

const limits = {

// Required. Maximum number of characters from user inbound messages to be processed.

// Characters beyond this limit will be ignored.

maxInputCharacters: 1000,

// Required: maximum number of tokens to generate in AI responses.

// The number of tokens is the length of the response text.

// Tokens represent the smallest unit of text the model can process and generate.

// AI model cost is primarily based on the input/output tokens.

// Learn more about tokens: https://platform.openai.com/docs/concepts#tokens

maxOutputTokens: 1000,

// Required. Maximum number of messages to store in cache per user chat.

// A higher number means higher OpenAI costs but more accurate responses thanks to more conversational context.

// The recommendation is to keep it between 10 and 20.

chatHistoryLimit: 20,

// Required. Maximum number of messages that the bot can reply on a single chat.

// This is useful to prevent abuse from users sending too many messages.

// If the limit is reached, the chat will be automatically assigned to an agent

// and the metadata key will be added to the chat contact: "bot:chatgpt:status" = "too_many_messages"

maxMessagesPerChat: 500,

// Time window in seconds after which the per-chat messages counter is reset.

maxMessagesPerChatCounterTime: 24 * 60 * 60,

// Maximum input audio duration in seconds: defaults to 2 minutes

// If the audio duration exceeds this limit, the message will be ignored.

maxAudioDuration: 2 * 60,

// Maximum image size in bytes: defaults to 2 MB

// If the image size exceeds this limit, the message will be ignored.

maxImageSize: 2 * 1024 * 1024

}

// Chatbot config

export default {

// Required. OmniChat API key to be used. See the `apiKey` declaration above.

apiKey,

// Required. Specify the OpenAI API key to be used. See the `openaiKey` declaration above.

// You can sign up for free here: https://platform.openai.com/signup

// Obtain your API key here: https://platform.openai.com/account/api-keys

openaiKey,

// Required. Set the OpenAI model to use. See the `openaiModel` declaration above.

// You can use a pre-existing model or create your fine-tuned model.

openaiModel,

// Optional. Specify the OmniChat device ID (24 characters hexadecimal length) to be used for the chatbot

// If no device is defined, the first connected WhatsApp device will be used.

// If you have multiple WhatsApp numbers connected to your OmniChat account, you should specify the device ID to be used.

// Obtain the device ID in the OmniChat app: https://chat.omnidigital.ae/number

device: env.DEVICE || 'ENTER WHATSAPP DEVICE ID',

// Callable functions for RAG to be interpreted by the AI. Optional.

// See: functions.js

// Edit as needed to cover your business use cases.

// It lets the AI request the execution of arbitrary functions

// in your code in order to augment the information for a specific user query.

// For example, you can call an external CRM in order to retrieve, save or validate

// specific information about the customer, such as email, phone number, user ID, etc.

// Learn more here: https://platform.openai.com/docs/guides/function-calling

functions,

// Supported AI features: see features declaration above

features,

// Limits for the chatbot: see limits declaration above

limits,

// Template message responses

templateMessages,

// Optional. HTTP server TCP port to be used. Defaults to 8080

port: +env.PORT || 8080,

// Optional. Use NODE_ENV=production to run the chatbot in production mode

production: env.NODE_ENV === 'production',

// Optional. Specify the webhook public URL to be used for receiving webhook events

// If no webhook is specified, the chatbot will automatically create an Ngrok tunnel

// and register it as the webhook URL.

// IMPORTANT: in order to use Ngrok tunnels, you need to sign up for free, see the option below.

webhookUrl: env.WEBHOOK_URL,

// Ngrok tunnel authentication token.

// Required if webhook URL is not provided or running the program from your computer.

// sign up for free and get one: https://ngrok.com/signup

// Learn how to obtain the auth token: https://ngrok.com/docs/agent/#authtokens

ngrokToken,

// Optional. Full path to the ngrok binary.

ngrokPath: env.NGROK_PATH,

// Temporary files path to store audio and image files. Defaults to `.tmp/`

tempPath: '.tmp',

// Set one or multiple labels on chatbot-managed chats

setLabelsOnBotChats: ['bot'],

// Remove labels when the chat is assigned to a person

removeLabelsAfterAssignment: true,

// Set one or multiple labels when the chat is assigned to a team member

setLabelsOnUserAssignment: ['from-bot'],

// Optional. Skip chats that have any of the following labels

skipChatWithLabels: ['no-bot'],

// Optional. Ignore processing messages sent by one of the following numbers

// Important: the phone number must be in E164 format with no spaces or symbols

// Example number: 1234567890

numbersBlacklist: ['1234567890'],

// Optional. OpenAI model completion inference params

// Learn more: https://platform.openai.com/docs/api-reference/chat/create

inferenceParams: {

temperature: 0.2

},

// Optional. Only process messages from the given phone numbers

// Important: the phone number must be in E164 format with no spaces or symbols

// Example number: 1234567890

numbersWhitelist: [],

// Skip chats that were archived in WhatsApp

skipArchivedChats: true,

// If true, when the user requests to chat with a human, the bot will assign

// the chat to a random available team member.

// You can specify which members are eligible to be assigned using the `teamWhitelist`

// and which should be ignored using `teamBlacklist`

enableMemberChatAssignment: true,

// If true, chats assigned by the bot will be only assigned to team members that are

// currently available and online (not unavailable or offline)

assignOnlyToOnlineMembers: false,

// Optional. Skip specific user roles from being automatically assigned by the chat bot

// Available roles are: 'admin', 'supervisor', 'agent'

skipTeamRolesFromAssignment: ['admin'], // 'supervisor', 'agent'

// Enter the team member IDs (24 characters length) that can be eligible to be assigned

// If the array is empty, all team members except the ones listed in `teamBlacklist`

// will be eligible for automatic assignment

teamWhitelist: [],

// Optional. Enter the team member IDs (24 characters length) that should never be automatically assigned chats to

teamBlacklist: [],

// Optional. Set metadata entries on bot-assigned chats

setMetadataOnBotChats: [

{

key: 'bot_start',

value: () => new Date().toISOString()

}

],

// Optional. Set metadata entries when a chat is assigned to a team member

setMetadataOnAssignment: [

{

key: 'bot_stop',

value: () => new Date().toISOString()

}

],

defaultMessage,

botInstructions,

welcomeMessage,

unknownCommandMessage,

// Do not change: specifies the base URL for the OmniChat API

apiBaseUrl: env.API_URL || 'https://api.omnidigital.ae/v1'

}

// Disable LanceDB logs: comment out this line to enable logs

env.LANCEDB_LOG = 0
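The two quota limits in config.js (`maxMessagesPerChat` and `maxMessagesPerChatCounterTime`) work together as a fixed time window per chat. The following is a standalone sketch of that logic, modeled on `hasChatMessagesQuota` in bot.js but with the clock passed in explicitly so it can be tested. `hasQuota` is a hypothetical name.

```javascript
// Hypothetical standalone version of the per-chat quota window.
// counters: { [chatId]: { messages: number, time: number } } (time in milliseconds)
function hasQuota (counters, chatId, limits, now = Date.now()) {
  const stat = counters[chatId] = counters[chatId] || { messages: 0, time: now }
  if (stat.messages < limits.maxMessagesPerChat) return true
  // Limit reached: reset the counter once the time window (in seconds) has elapsed
  if (now - stat.time >= limits.maxMessagesPerChatCounterTime * 1000) {
    stat.messages = 0
    stat.time = now
    return true
  }
  return false
}
```

The window starts when the chat's counter is created or reset, not at each message, so a chat that hits the limit stays blocked until the full window has passed.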

// ---- functions.js ----

// Tool functions to be consumed by the AI when needed.

// Edit as needed to cover your business use cases.

// It lets the AI request the execution of arbitrary functions

// in your code in order to augment the information for a specific user query.

// For example, you can call an external CRM in order to retrieve, save or validate

// specific information about the customer, such as email, phone number, user ID, etc.

// Learn more here: https://platform.openai.com/docs/guides/function-calling

const functions = [

// Sample function to retrieve plan prices of the product

// Edit as needed to cover your business use cases

{

name: 'getPlanPrices',

description: 'Get information about the plans and prices available in OmniChat',

parameters: { type: 'object', properties: {} },

// Function implementation that will be executed when the AI needs to call this function

// The function must return a string with the information to be sent back to the AI for the response generation

// You can also return a JSON or a prompt message instructing the AI how to respond to a user

// Functions may be synchronous or asynchronous.

//

// The bot will inject the following parameters:

// - parameters: function parameters provided by the AI when the function has parameters defined

// - response: AI generated response object, useful to evaluate the AI response and take actions

// - data: webhook event context, useful to access the last user message, chat and contact information

// - device: WhatsApp number device information provided by the OmniChat API

// - messages: a list of previous messages in the same user chat

run: async ({ parameters, response, data, device, messages }) => {

// console.log('=> data:', data)

// console.log('=> response:', response)

const reply = [

'*Send & Receive messages + API + Webhooks + Team Chat + Campaigns + CRM + Analytics*',

'',

'- Platform Professional: 30,000 messages + unlimited inbound messages + 10 campaigns / month',

'- Platform Business: 60,000 messages + unlimited inbound messages + 20 campaigns / month',

'- Platform Enterprise: unlimited messages + 30 campaigns',

'',

'Each plan is limited to one WhatsApp number. You can purchase multiple plans if you have multiple numbers.',

'',

'*Find more information about the different plan prices and features here:*',

'https://omnidigital.ae/#pricing'

].join('\n')

return reply

}

},

// Sample function to load user information from a CRM

{

name: 'loadUserInformation',

description: 'Find user name and email from the CRM',

parameters: {

type: 'object',

properties: {}

},

run: async ({ parameters, response, data, device, messages }) => {

// You may call a remote API or run a database query

const reply = 'I am sorry, I am not able to access the CRM at the moment. Please try again later.'

return reply

}

},

// Sample function to verify meeting availability for a given date and time

{

name: 'verifyMeetingAvailability',

description: 'Verify if a given date and time is available for a meeting before booking it',

parameters: {

type: 'object',

properties: {

date: { type: 'string', format: 'date-time', description: 'Date of the meeting' }

},

required: ['date']

},

run: async ({ parameters, response, data, device, messages }) => {

console.log('=> verifyMeetingAvailability call parameters:', parameters)

// Example: you can make an API call to verify the date and time availability and return the confirmation or rejection message

const date = new Date(parameters.date)

if (Number.isNaN(date.getTime())) {

return 'Invalid date: ask the user for a valid date and time'

}

// getUTCDay() returns 0 for Sunday and 6 for Saturday

const day = date.getUTCDay()

if (day === 0 || day === 6) {

return 'Not available on weekends'

}

// Business hours are evaluated in UTC here: adjust to your own timezone as needed

const hour = date.getUTCHours()

if (hour < 9 || hour >= 17) {

return 'Not available outside business hours: 9 am to 5 pm'

}

return 'Available'

}

},

// Sample function to book a sales meeting

{

name: 'bookSalesMeeting',

description: 'Book a sales or demo meeting with the customer on a specific date and time',

parameters: {

type: 'object',

properties: {

date: { type: 'string', format: 'date-time', description: 'Date of the meeting' }

},

required: ['date']

},

run: async ({ parameters, response, data, device, messages }) => {

console.log('=> bookSalesMeeting call parameters:', parameters)

// Make an API call to book the meeting and return the confirmation or rejection message

return 'Meeting booked successfully. You will receive a confirmation email shortly.'

}

},

// Sample function to determine the current date and time

{

name: 'currentDateAndTime',

description: 'What is the current date and time',

run: async ({ parameters, response, data, device, messages }) => {

return new Date().toLocaleString()

}

}

]

export default functions
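The OpenAI Chat Completions API expects tool definitions wrapped as `{ type: 'function', function: { name, description, parameters } }`, without the local `run` implementation. The following is a minimal sketch of converting the `functions` array above into that shape. `toOpenAITools` is a hypothetical helper, and defaulting `parameters` to an empty object schema (for entries such as `currentDateAndTime` that omit it) is an assumption.

```javascript
// Hypothetical helper: map local function definitions to OpenAI tool definitions.
// The `run` implementation stays local and is never sent to the API.
function toOpenAITools (functions) {
  return functions.map(({ name, description, parameters }) => ({
    type: 'function',
    function: {
      name,
      description,
      // Entries without parameters get an empty object schema
      parameters: parameters || { type: 'object', properties: {} }
    }
  }))
}

// Usage with a sample definition shaped like the entries in functions.js
const tools = toOpenAITools([
  { name: 'currentDateAndTime', description: 'What is the current date and time', run: async () => new Date().toLocaleString() }
])
console.log(JSON.stringify(tools, null, 2))
```

The resulting array can be passed as the `tools` option of a chat completion request. When the model replies with `tool_calls`, each call's `function.name` and `function.arguments` identify which local `run` to execute.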

// ---- bot.js ----

import path from 'path'

import fs from 'fs/promises'

import OpenAI from 'openai'

import config from './config.js'

import { state, stats } from './store.js'

import * as actions from './actions.js'

// Initialize OpenAI client

const ai = new OpenAI({ apiKey: config.openaiKey })

// Determine if a given inbound message can be replied by the AI bot

function canReply ({ data, device }) {

const { chat } = data

// Skip chat if already assigned to a team member

if (chat.owner?.agent) {

return false

}

// Skip messages received from the same number: prevent self-reply loops

if (chat.fromNumber === device.phone) {

console.log('[debug] Skip message: cannot chat with your own WhatsApp number:', device.phone)

return false

}

// Skip messages from group chats and channels

if (chat.type !== 'chat') {

return false

}

// Skip replying chat if it has one of the configured labels, when applicable

if (config.skipChatWithLabels && config.skipChatWithLabels.length && chat.labels && chat.labels.length) {

if (config.skipChatWithLabels.some(label => chat.labels.includes(label))) {

return false

}

}

// Only reply to chats that were whitelisted, when applicable

// Whitelisted numbers still go through the remaining checks below (blacklist, blocked, archived)

if (config.numbersWhitelist && config.numbersWhitelist.length && chat.fromNumber) {

if (!config.numbersWhitelist.some(number => number === chat.fromNumber || chat.fromNumber.slice(1) === number)) {

return false

}

}

// Skip replying to chats that were explicitly blacklisted, when applicable

if (config.numbersBlacklist && config.numbersBlacklist.length && chat.fromNumber) {

if (config.numbersBlacklist.some(number => number === chat.fromNumber || chat.fromNumber.slice(1) === number)) {

return false

}

}

// Skip replying to blocked chats

if (chat.status === 'banned' || chat.waStatus === 'banned') {

return false

}

// Skip blocked contacts

if (chat.contact?.status === 'blocked') {

return false

}

// Skip replying chats that were archived, when applicable

if (config.skipArchivedChats && (chat.status === 'archived' || chat.waStatus === 'archived')) {

return false

}

return true

}

// Send message back to the user and perform post-message required actions like

// adding labels to the chat or updating the chat's contact metadata

function replyMessage ({ data, device, useAudio }) {

return async ({ message, ...params }, { text } = {}) => {

const { phone } = data.chat.contact

// If audio mode, create a new voice message

let fileId = null

if (config.features.audioOutput && !text && message.length <= 4096 && (useAudio || config.features.audioOnly)) {

// Send recording audio chat state in background

actions.sendTypingState({ data, device, action: 'recording' })

// Generate audio recording

console.log('[info] generating audio response for chat:', data.fromNumber, message)

const audio = await ai.audio.speech.create({

input: message,

model: 'tts-1',

voice: config.features.voice,

response_format: 'mp3',

speed: config.features.voiceSpeed

})

const timestamp = Date.now().toString(16)

const random = Math.floor(Math.random() * 0xfffff).toString(16)

fileId = `${timestamp}${random}`

const filepath = path.join(`${config.tempPath}`, `${fileId}.mp3`)

const buffer = Buffer.from(await audio.arrayBuffer())

await fs.writeFile(filepath, buffer)

} else {

// Send text typing chat state in background

actions.sendTypingState({ data, device, action: 'typing' })

}

const payload = {

phone,

device: device.id,

message,

reference: 'bot:chatgpt',

...params

}

if (fileId) {

payload.message = undefined

// Get the base URL and add the files path next to the webhook path

const schema = new URL(config.webhookUrl)

const basePath = path.posix.dirname(schema.pathname)

const url = `${schema.protocol}//${schema.host}${path.posix.join(basePath, 'files', fileId)}${schema.search}`

payload.media = { url, format: 'ptt' }

}

const msg = await actions.sendMessage(payload)

// Store sent message in chat history

state[data.chat.id] = state[data.chat.id] || {}

state[data.chat.id][msg.waId] = {

id: msg.waId,

flow: 'outbound',

date: msg.createdAt,

body: message

}

// Increase chat messages quota

stats[data.chat.id] = stats[data.chat.id] || { messages: 0, time: Date.now() }

stats[data.chat.id].messages += 1

// Add bot-managed chat labels, if required

if (config.setLabelsOnBotChats.length) {

// Only add the labels that the chat does not have yet

const labels = config.setLabelsOnBotChats.filter(label => !(data.chat.labels || []).includes(label))

if (labels.length) {

await actions.updateChatLabels({ data, device, labels })

}

}

// Add bot-managed chat metadata, if required

if (config.setMetadataOnBotChats.length) {

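// Resolve dynamic values, such as the `bot_start` timestamp function defined in config.js

const metadata = config.setMetadataOnBotChats.filter(entry => entry && entry.key && entry.value).map(({ key, value }) => ({ key, value: typeof value === 'function' ? value() : value }))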

await actions.updateChatMetadata({ data, device, metadata })

}

}

}

function parseArguments (json) {

try {

return JSON.parse(json || '{}')

} catch (err) {

return {}

}

}

function hasChatMetadataQuotaExceeded (chat) {

const key = chat.contact?.metadata?.find(x => x.key === 'bot:chatgpt:status')

if (key?.value === 'too_many_messages') {

return true

}

return false

}

// Messages quota per chat

function hasChatMessagesQuota (chat) {

const stat = stats[chat.id] = stats[chat.id] || { messages: 0, time: Date.now() }

if (stat.messages >= config.limits.maxMessagesPerChat) {

// Reset chat messages quota after the time limit

if ((Date.now() - stat.time) >= (config.limits.maxMessagesPerChatCounterTime * 1000)) {

stat.messages = 0

stat.time = Date.now()

return true

}

return false

}

return true

}

// Update chat metadata if messages quota is exceeded

async function updateChatOnMessagesQuota ({ data, device }) {

const { chat } = data

if (hasChatMetadataQuotaExceeded(chat)) {

return false

}

await Promise.all([

// Assign chat to an agent

actions.assignChatToAgent({

data,

device,

force: true

}),

// Update metadata status to 'too_many_messages'

actions.updateChatMetadata({

data,

device,

metadata: [{ key: 'bot:chatgpt:status', value: 'too_many_messages' }]

})

])

}

// Process message received from the user on every new inbound webhook event

export async function processMessage ({ data, device } = {}, { rag } = {}) {

// Can reply to this message?

if (!canReply({ data, device })) {

return console.log('[info] Skip message - chat is not eligible to reply due to active filters:', data.fromNumber, data.date, data.body)

}

const { chat } = data

// Chat has enough messages quota

if (!hasChatMessagesQuota(chat)) {

console.log('[info] Skip message - chat has reached the maximum messages quota:', data.fromNumber)

return await updateChatOnMessagesQuota({ data, device })

}

// Update chat status metadata if messages quota is not exceeded

if (hasChatMetadataQuotaExceeded(chat)) {

actions.updateChatMetadata({ data, device, metadata: [{ key: 'bot:chatgpt:status', value: 'active' }] })

.catch(err => console.error('[error] failed to update chat metadata:', data.chat.id, err.message))

}

// If audio message, transcribe it to text

if (data.type === 'audio') {

const noAudioMessage = config.templateMessages.noAudioAccepted || 'Audio messages are not supported: gently ask the user to send text messages only.'

if (config.features.audioInput && +data.media.meta?.duration <= config.limits.maxAudioDuration) {

const transcription = await actions.transcribeAudio({ message: data, device })

if (transcription) {

data.body = transcription

} else {

console.error('[error] failed to transcribe audio message:', data.fromNumber, data.date, data.media.id)

data.body = noAudioMessage

}

} else {

// console.log('[info] skip message - audio input processing is disabled, enable it on config.js:', data.fromNumber)

data.body = noAudioMessage

}

}

// Extract input body per message type

if (data.type === 'video' && !data.body) {

data.body = 'Video message cannot be processed. Send a text message.'

}

if (data.type === 'document' && !data.body) {

data.body = 'Document message cannot be processed. Send a text message.'

}

if (data.type === 'location' && !data.body) {

data.body = `Location: ${data.location.name || ''} ${data.location.address || ''}`

}

if (data.type === 'poll' && !data.body) {

data.body = `Poll: ${data.poll.name || 'unnamed'}\n${data.poll.options.map(x => '- ' + x.name).join('\n')}`

}

if (data.type === 'event' && !data.body) {

data.body = [

`Meeting event: ${data.event.name || 'unnamed'}`,

`Description: ${data.event.description || 'no description'}`,

`Date: ${data.event.date || 'no date'}`,

`Location: ${data.event.location || 'undefined location'}`,

`Call link: ${data.event.link || 'no call link'}`

].join('\n')

}

if (data.type === 'contacts') {

data.body = data.contacts.map(x => `- Contact card: ${x.formattedName || x.name || x.firstName || ''} - Phone: ${x.phones ? x.phones.map(p => p.number || p.waid).join(', ') : ''}`).join('\n')

}

// User message input

const body = data?.body?.trim().slice(0, Math.min(config.limits.maxInputCharacters, 10000))

console.log('[info] New inbound message received:', chat.id, data.type, body || '<empty message>')

// If input message is audio, reply with an audio message, unless features.audioOutput is false

const useAudio = data.type === 'audio'

// Create partial function to reply the chat

const reply = replyMessage({ data, device, useAudio })

if (!body) {

// Reply with the default message, unless this is a supported image that the AI can process below

if (data.type !== 'image' || !config.features.imageInput || data.media.size > config.limits.maxImageSize) {

// Default to unknown command response

const unknownCommand = `${config.unknownCommandMessage}\n\n${config.defaultMessage}`

return await reply({ message: unknownCommand }, { text: true })

}

}

// Assign the chat to a random agent if the user asks to talk with a human

if (body && (/^(human|person|help|stop)$/i.test(body) || /^human/i.test(body))) {

actions.assignChatToAgent({ data, device, force: true }).catch(err => {

console.error('[error] failed to assign chat to user:', data.chat.id, err.message)

})

const message = config.templateMessages.chatAssigned || 'You will be contacted shortly by someone from our team. Thank you for your patience.'

return await reply({ message }, { text: true })

}

// Fetch the previous messages history for this chat, if not loaded yet

if (!state[data.chat.id]) {

console.log('[info] fetch previous messages history for chat:', data.chat.id)

await actions.pullChatMessages({ data, device })

}

// Chat messages history

const chatMessages = state[data.chat.id] = state[data.chat.id] || {}

// Chat configuration

const { apiBaseUrl } = config

// Compose the previous chat messages to give the AI context for better responses

const previousMessages = Object.values(chatMessages)

.sort((a, b) => +new Date(b.date) - +new Date(a.date))

.slice(0, 40)

.reverse()

.map(message => {

if (message.flow === 'inbound' && !message.body && message.type === 'image' && config.features.imageInput && message.media.size <= config.limits.maxImageSize) {

const url = apiBaseUrl + message.media.links.download.slice(3) + '?token=' + config.apiKey

return {

role: 'user',

content: [{

type: 'image_url',

image_url: { url }

}, message.media.caption ? { type: 'text', text: message.media.caption } : null].filter(x => x)

}

} else {

return {

role: message.flow === 'inbound' ? 'user' : (message.role || 'assistant'),

content: message.body

}

}

})

.filter(message => message.content).slice(-(+config.limits.chatHistoryLimit || 20))

const messages = [

{ role: 'system', content: config.botInstructions },

...previousMessages

]

const lastMessage = messages[messages.length - 1]

if (lastMessage.role !== 'user' || lastMessage.content !== body) {

if (config.features.imageInput && data.type === 'image' && !data.body && data.media.size <= config.limits.maxImageSize) {

const url = apiBaseUrl + data.media.links.download.slice(3) + '?token=' + config.apiKey

messages.push({

role: 'user',

content: [

{

type: 'image_url',

image_url: { url }

},

data.media.caption ? { type: 'text', text: data.media.caption } : null

].filter(x => x)

})

} else {

messages.push({ role: 'user', content: body })

}

}

// Add tool functions to the AI model, if available

const tools = (config.functions || []).filter(x => x && x.name).map(({ name, description, parameters, strict }) => (

{ type: 'function', function: { name, description, parameters, strict } }

))

// If knowledge is enabled, query it

if (rag && body && (data.type === 'text' || data.type === 'audio')) {

const result = await rag.query(body)

// const result = await rag.search(body)

console.log('[debug] RAG result:', result?.content)

if (result?.content) {

messages.push({ role: 'assistant', content: result.content })

}

}

// Generate response using AI

let completion = await ai.chat.completions.create({

tools,

messages,

model: config.openaiModel,

max_completion_tokens: config.limits.maxOutputTokens,

temperature: config.inferenceParams.temperature,

user: `${device.id}_${chat.id}`

})

// Reply with unknown / default response on invalid/error

if (!completion.choices?.length) {

const unknownCommand = `${config.unknownCommandMessage}\n\n${config.defaultMessage}`

return await reply({ message: unknownCommand })

}

// Process tool function calls, if required by the AI model

const maxCalls = 10

let [response] = completion.choices

let count = 0

while (response?.message?.tool_calls?.length && count < maxCalls) {

count += 1

// Collect the tool responses for the function calls requested by the AI

const responses = []

// Store tool calls in history

messages.push({ role: 'assistant', tool_calls: response.message.tool_calls })

// Call tool functions triggered by the AI

const calls = response.message.tool_calls.filter(x => x.id && x.type === 'function')

for (const call of calls) {

const func = config.functions.find(x => x.name === call.function.name)

if (func && typeof func.run === 'function') {

const parameters = parseArguments(call.function.arguments)

console.log('[info] run function:', call.function.name, parameters)

// Run the function and get the response message

const message = await func.run({ parameters, response, data, device, messages })

if (message) {

responses.push({ role: 'tool', content: message, tool_call_id: call.id })

}

} else if (!func) {

console.error('[warning] missing function call in config.functions', call.function.name)

}

}

if (!responses.length) {

break

}

// Add tool responses to the chat history

messages.push(...responses)

// Generate a new response based on the tool functions responses

completion = await ai.chat.completions.create({

tools,

messages,

temperature: 0.2,

model: config.openaiModel,

user: `${device.id}_${chat.id}`

})

// Reply with unknown / default response on invalid/error

if (!completion.choices?.length) {

break

}

// Use the latest AI response for the next iteration

response = completion.choices[0]

if (!response || response.finish_reason === 'stop') {

break

}

}

// Reply with the AI generated response

if (completion.choices?.length) {

return await reply({ message: response?.message?.content || config.unknownCommandMessage })

}

// Unknown default response

const unknownCommand = `${config.unknownCommandMessage}\n\n${config.defaultMessage}`

await reply({ message: unknownCommand })

}
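The tool-calling loop in processMessage mixes API calls, history updates and function execution, which makes it hard to test on its own. Below is a minimal sketch of the same pattern, assuming the OpenAI client is replaced by an injected `createCompletion` function (a hypothetical stand-in for `ai.chat.completions.create` that returns a Chat Completions-shaped object) and that each entry in `functions` has the `{ name, run }` shape used by `config.functions`. For brevity only `parameters` is passed to `run`.

```javascript
// Parse tool arguments defensively: models can emit invalid JSON
function safeParse (json) {
  try {
    return JSON.parse(json || '{}')
  } catch {
    return {}
  }
}

// Sketch of the tool-calling loop. `createCompletion` is a hypothetical stand-in
// for ai.chat.completions.create; `functions` mirrors config.functions entries.
async function runToolLoop ({ messages, functions, createCompletion, maxCalls = 10 }) {
  let completion = await createCompletion({ messages })
  let response = completion.choices?.[0]
  let count = 0

  while (response?.message?.tool_calls?.length && count < maxCalls) {
    count += 1
    const toolCalls = response.message.tool_calls

    // Keep the assistant turn that requested the tools in the history
    messages.push({ role: 'assistant', tool_calls: toolCalls })

    for (const call of toolCalls) {
      const func = functions.find(f => f.name === call.function.name)
      let content = 'Function not available.'
      if (func && typeof func.run === 'function') {
        const parameters = safeParse(call.function.arguments)
        content = String(await func.run({ parameters }) || 'No result.')
      }
      // Answer every tool_call_id, even when the function is missing or returns nothing
      messages.push({ role: 'tool', tool_call_id: call.id, content })
    }

    // Ask the model again, now with the tool results in the history
    completion = await createCompletion({ messages })
    response = completion.choices?.[0]
  }

  return response?.message?.content || null
}
```

One deliberate difference from processMessage: this sketch sends a `tool` message for every tool call. The Chat Completions API rejects a request in which an assistant message with `tool_calls` is not followed by a tool response for each `tool_call_id`, so answering every call avoids that error.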

import fs from 'fs'

import axios from 'axios'

import OpenAI from 'openai'

import config from './config.js'

import { state, cache, cacheTTL } from './store.js'

// Initialize OpenAI client

const ai = new OpenAI({ apiKey: config.openaiKey })

// Base URL API endpoint. Do not edit!

const API_URL = config.apiBaseUrl

// Function to send a message using the OmniChat API

export async function sendMessage ({ phone, message, media, device, ...fields }) {

const url = `${API_URL}/messages`

const body = {

phone,

message,

media,

device,

...fields,

enqueue: 'never'

}

let retries = 3

while (retries) {

retries -= 1

try {

const res = await axios.post(url, body, {

headers: { Authorization: config.apiKey }

})

console.log('[info] Message sent:', phone, res.data.id, res.data.status)

return res.data

} catch (err) {

console.error('[error] failed to send message:', phone, message || (body.list ? body.list.description : '<no message>'), err.response ? err.response.data : err)

}

}

return false

}

export async function pullMembers (device) {

if (cache.members && +cache.members.time && (Date.now() - +cache.members.time) < cacheTTL) {

return cache.members.data

}

const url = `${API_URL}/devices/${device.id}/team`

const { data: members } = await axios.get(url, { headers: { Authorization: config.apiKey } })

cache.members = { data: members, time: Date.now() }

return members

}

export async function validateMembers (device, members = []) {

const memberIds = (config.teamWhitelist || []).concat(config.teamBlacklist || [])

for (const id of memberIds) {

if (typeof id !== 'string' || id.length !== 24) {

return exit('Team user ID in config.teamWhitelist and config.teamBlacklist must be a 24-character hexadecimal value:', id)

}

const exists = members.some(user => user.id === id)

if (!exists) {

return exit('Team user ID in config.teamWhitelist or config.teamBlacklist does not exist:', id)

}

}

}

export async function createLabels (device) {

const labels = cache.labels.data || []

const requiredLabels = (config.setLabelsOnUserAssignment || []).concat(config.setLabelsOnBotChats || [])

const missingLabels = requiredLabels.filter(label => labels.every(l => l.name !== label))

for (const label of missingLabels) {

console.log('[info] creating missing label:', label)

const url = `${API_URL}/devices/${device.id}/labels`

const body = {

name: label.slice(0, 30).trim(),

color: [

'tomato', 'orange', 'sunflower', 'bubble',

'rose', 'poppy', 'rouge', 'raspberry',

'purple', 'lavender', 'violet', 'pool',

'emerald', 'kelly', 'apple', 'turquoise',

'aqua', 'gold', 'latte', 'cocoa'

][Math.floor(Math.random() * 20)],

description: 'Automatically created label for the chatbot'

}

try {

await axios.post(url, body, { headers: { Authorization: config.apiKey } })

} catch (err) {

console.error('[error] failed to create label:', label, err.message)

}

}

if (missingLabels.length) {

await pullLabels(device, { force: true })

}

}

export async function pullLabels (device, { force } = {}) {

if (!force && cache.labels && +cache.labels.time && (Date.now() - +cache.labels.time) < cacheTTL) {

return cache.labels.data

}

const url = `${API_URL}/devices/${device.id}/labels`

const { data: labels } = await axios.get(url, { headers: { Authorization: config.apiKey } })

cache.labels = { data: labels, time: Date.now() }

return labels

}

export async function updateChatLabels ({ data, device, labels }) {

const url = `${API_URL}/chat/${device.id}/chats/${data.chat.id}/labels`

// Copy the current labels so the webhook payload is not mutated

const newLabels = [...(data.chat.labels || [])]

for (const label of labels) {

if (!newLabels.includes(label)) {

newLabels.push(label)

}

}

if (newLabels.length) {

console.log('[info] update chat labels:', data.chat.id, newLabels)

await axios.patch(url, newLabels, { headers: { Authorization: config.apiKey } })

}

}

export async function updateChatMetadata ({ data, device, metadata }) {

const url = `${API_URL}/chat/${device.id}/contacts/${data.chat.id}/metadata`

const entries = []

const contactMetadata = data.chat.contact.metadata

for (const entry of metadata) {

if (entry && entry.key && entry.value) {

const value = typeof entry.value === 'function' ? entry.value() : entry.value

if (!entry.key || !value || typeof entry.key !== 'string' || typeof value !== 'string') {

continue

}

if (contactMetadata && contactMetadata.some(e => e.key === entry.key && e.value === value)) {

continue // skip if metadata entry is already present

}

entries.push({

key: entry.key.slice(0, 30).trim(),

value: value.slice(0, 1000).trim()

})

}

}

if (entries.length) {

await axios.patch(url, entries, { headers: { Authorization: config.apiKey } })

}

}

export async function selectAssignMember (device) {

const members = await pullMembers(device)

const isMemberEligible = (member) => {

if (config.teamBlacklist.length && config.teamBlacklist.includes(member.id)) {

return false

}

if (config.teamWhitelist.length && !config.teamWhitelist.includes(member.id)) {

return false

}

if (config.assignOnlyToOnlineMembers && (member.availability.mode !== 'auto' || ((Date.now() - +new Date(member.lastSeenAt)) > 30 * 60 * 1000))) {

return false

}

if (config.skipTeamRolesFromAssignment && config.skipTeamRolesFromAssignment.some(role => member.role === role)) {

return false

}

return true

}

const activeMembers = members.filter(member => member.status === 'active' && isMemberEligible(member))

if (!activeMembers.length) {

return console.log('[warning] Unable to assign chat: no eligible team members')

}

const targetMember = activeMembers[activeMembers.length * Math.random() | 0]

return targetMember

}

async function assignChat ({ member, data, device }) {

const url = `${API_URL}/chat/${device.id}/chats/${data.chat.id}/owner`

const body = { agent: member.id }

await axios.patch(url, body, { headers: { Authorization: config.apiKey } })

if (config.setMetadataOnAssignment && config.setMetadataOnAssignment.length) {

const metadata = config.setMetadataOnAssignment.filter(entry => entry && entry.key && entry.value).map(({ key, value }) => ({ key, value }))

await updateChatMetadata({ data, device, metadata })

}

}

export async function assignChatToAgent ({ data, device, force }) {

if (!config.enableMemberChatAssignment && !force) {

return console.log('[debug] Unable to assign chat: member chat assignment is disabled. Enable it in config.enableMemberChatAssignment = true')

}

try {

const member = await selectAssignMember(device)

if (member) {

let updateLabels = []

// Remove labels before chat assigned, if required

if (config.removeLabelsAfterAssignment && config.setLabelsOnBotChats && config.setLabelsOnBotChats.length) {

const labels = (data.chat.labels || []).filter(label => !config.setLabelsOnBotChats.includes(label))

console.log('[info] remove labels before assigning chat to user', data.chat.id, labels)

if (labels.length) {

updateLabels = labels

}

}

// Set labels on chat assignment, if required

if (config.setLabelsOnUserAssignment && config.setLabelsOnUserAssignment.length) {

let labels = (data.chat.labels || [])

if (updateLabels.length) {

labels = labels.filter(label => !updateLabels.includes(label))

}

for (const label of config.setLabelsOnUserAssignment) {

if (!labels.includes(label)) {

updateLabels.push(label)

}

}

}

if (updateLabels.length) {

console.log('[info] set labels on chat assignment to user', data.chat.id, updateLabels)

await updateChatLabels({ data, device, labels: updateLabels })

}

console.log('[info] automatically assign chat to user:', data.chat.id, member.displayName, member.email)

await assignChat({ member, data, device })

} else {

console.log('[info] Unable to assign chat: no eligible or available team members based on the current configuration:', data.chat.id)

}

return member

} catch (err) {

console.error('[error] failed to assign chat:', data.id, data.chat.id, err)

}

}

export async function pullChatMessages ({ data, device }) {

try {

const url = `${API_URL}/chat/${device.id}/messages/?chat=${data.chat.id}&limit=25`

const res = await axios.get(url, { headers: { Authorization: config.apiKey } })

state[data.chat.id] = res.data.reduce((acc, message) => {

acc[message.id] = message

return acc

}, state[data.chat.id] || {})

return res.data

} catch (err) {

console.error('[error] failed to pull chat messages history:', data.id, data.chat.id, err)

}

}

// Find an active WhatsApp device connected to the OmniChat API

export async function loadDevice () {

const url = `${API_URL}/devices`

const { data } = await axios.get(url, {

headers: { Authorization: config.apiKey }

})

if (config.device && !config.device.includes(' ')) {

if (/^[a-f0-9]{24}$/i.test(config.device) === false) {

return exit('Invalid WhatsApp device ID: must be a 24-character hexadecimal value. Get the device ID here: https://chat.omnidigital.ae/number')

}

return data.find(device => device.id === config.device)

}

return data.find(device => device.status === 'operative')

}

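// Register (or reuse) the webhook endpoint for the chatbot

// In development mode the base URL is the Ngrok tunnel; in production it is config.webhookUrl

export async function registerWebhook (tunnel, device) {

// Append the /webhook route unless the URL already ends with it

const baseUrl = tunnel.replace(/\/+$/, '')

const webhookUrl = baseUrl.endsWith('/webhook') ? baseUrl : `${baseUrl}/webhook`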

const url = `${API_URL}/webhooks`

const { data: webhooks } = await axios.get(url, {

headers: { Authorization: config.apiKey }

})

const findWebhook = webhook => {

return (

webhook.url === webhookUrl &&

webhook.device === device.id &&

webhook.status === 'active' &&

webhook.events.includes('message:in:new')

)

}

// If webhook already exists, return it

const existing = webhooks.find(findWebhook)

if (existing) {

return existing

}

for (const webhook of webhooks) {

// Delete previous ngrok webhooks

if (webhook.url.includes('ngrok-free.app') || webhook.url.startsWith(tunnel)) {

const url = `${API_URL}/webhooks/${webhook.id}`

await axios.delete(url, { headers: { Authorization: config.apiKey } })

}

}

await new Promise(resolve => setTimeout(resolve, 500))

const data = {

url: webhookUrl,

name: 'Chatbot',

events: ['message:in:new'],

device: device.id

}

const { data: webhook } = await axios.post(url, data, {

headers: { Authorization: config.apiKey }

})

return webhook

}

export async function transcribeAudio ({ message, device }) {

if (!message?.media?.id) {

return false

}

try {

const url = `${API_URL}/chat/${device.id}/files/${message.media.id}/download`

const response = await axios.get(url, {

headers: { Authorization: config.apiKey },

responseType: 'stream'

})

if (response.status !== 200) {

return false

}

const tmpFile = `${message.media.id}.mp3`

await new Promise((resolve, reject) => {

const writer = fs.createWriteStream(tmpFile)

response.data.pipe(writer)

writer.on('finish', () => resolve())

writer.on('error', reject)

})

const transcription = await ai.audio.transcriptions.create({

file: fs.createReadStream(tmpFile),

model: 'whisper-1',

response_format: 'text'

})

await fs.promises.unlink(tmpFile)

return transcription

} catch (err) {

console.error('[error] failed to transcribe audio:', message.fromNumber, message.media.id, err.message)

return false

}

}

export async function sendTypingState ({ data, device, action }) {

const url = `${API_URL}/chat/${device.id}/typing`

const body = { action: action || 'typing', duration: 10, chat: data.fromNumber }

try {

await axios.post(url, body, { headers: { Authorization: config.apiKey } })

} catch (err) {

console.error('[error] failed to send typing state:', data.fromNumber, body, err.message)

}

}

export function exit (msg, ...args) {

console.error('[error]', msg, ...args)

process.exit(1)

}
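The eligibility rules in selectAssignMember (blacklist, whitelist, online availability, excluded roles) are easier to verify when written as a pure function. The sketch below is a hypothetical `pickAssignee` helper that mirrors those rules with the clock and the random generator injected. It assumes the member fields used by the original code (`status`, `role`, `availability.mode`, `lastSeenAt`) and, unlike the original, treats a missing `lastSeenAt` as offline.

```javascript
// Hypothetical pure version of the member eligibility rules in selectAssignMember().
// `now` and `random` are injected so the selection can be tested deterministically.
function pickAssignee (members, options = {}, now = Date.now(), random = Math.random) {
  const {
    teamWhitelist = [],
    teamBlacklist = [],
    assignOnlyToOnlineMembers = false,
    skipTeamRolesFromAssignment = []
  } = options
  const thirtyMinutes = 30 * 60 * 1000

  const eligible = members.filter(member => {
    if (member.status !== 'active') return false
    if (teamBlacklist.includes(member.id)) return false
    if (teamWhitelist.length && !teamWhitelist.includes(member.id)) return false
    if (skipTeamRolesFromAssignment.includes(member.role)) return false
    if (assignOnlyToOnlineMembers) {
      const lastSeen = +new Date(member.lastSeenAt)
      // A NaN lastSeen (missing date) fails the check and counts as offline
      const recentlySeen = now - lastSeen <= thirtyMinutes
      if (member.availability?.mode !== 'auto' || !recentlySeen) return false
    }
    return true
  })

  if (!eligible.length) return null

  // Pick a random eligible member, as the original code does
  return eligible[Math.floor(random() * eligible.length)]
}
```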

import fs from 'fs'

import ngrok from 'ngrok'

import nodemon from 'nodemon'

import config from './config.js'

import server from './server.js'

import * as actions from './actions.js'

const { exit } = actions

// Create an Ngrok tunnel that exposes the local server port to the Internet

async function createTunnel () {

let retries = 3

try {

await ngrok.upgradeConfig({ relocate: false })

} catch (err) {

console.error('[warning] Failed to upgrade Ngrok config:', err.message)

}

while (retries) {

retries -= 1

try {

const tunnel = await ngrok.connect({

addr: config.port,

authtoken: config.ngrokToken,

path: () => config.ngrokPath

})

console.log(`Ngrok tunnel created: ${tunnel}`)

config.webhookUrl = tunnel

return tunnel

} catch (err) {

console.error('[error] Failed to create Ngrok tunnel:', err.message)

await ngrok.kill()

await new Promise(resolve => setTimeout(resolve, 1000))

}

}

throw new Error('Failed to create Ngrok tunnel')

}

// Development server using nodemon to restart the bot on file changes

async function devServer () {

const tunnel = await createTunnel()

nodemon({

script: 'bot.js',

ext: 'js',

watch: ['*.js', 'src/**/*.js'],

exec: `WEBHOOK_URL=${tunnel} DEV=false npm run start`

}).on('restart', () => {

console.log('[info] Restarting bot after changes...')

}).on('quit', () => {

console.log('[info] Closing bot...')

ngrok.kill().then(() => process.exit(0))

})

}

const loadWhatsAppDevice = async () => {

try {

const device = await actions.loadDevice()

if (!device || device.status !== 'operative') {

return exit('No active WhatsApp numbers in your account. Please connect a WhatsApp number in your OmniChat account:\nhttps://chat.omnidigital.ae/create')

}

return device

} catch (err) {

if (err.response?.status === 403) {

return exit('Unauthorized OmniChat API key: please make sure you are correctly setting the API token, obtain your API key here:\nhttps://chat.omnidigital.ae/developers/apikeys')

}

if (err.response?.status === 404) {

return exit('No active WhatsApp numbers in your account. Please connect a WhatsApp number in your OmniChat account:\nhttps://chat.omnidigital.ae/create')

}

return exit('Failed to load WhatsApp number:', err.message)

}

}

// Initialize chatbot server

async function main () {

// API key must be provided

if (!config.apiKey || config.apiKey.length < 60) {

return exit('Please sign up in OmniChat and obtain your API key:\nhttps://chat.omnidigital.ae/apikeys')

}

// OpenAI API key must be provided

if (!config.openaiKey || config.openaiKey.length < 45) {

return exit('Missing required OpenAI API key: please sign up for free and obtain your API key:\nhttps://platform.openai.com/account/api-keys')

}

// Create dev mode server with Ngrok tunnel and nodemon

if (process.env.DEV === 'true' && !config.production) {

return devServer()

}

// Find a WhatsApp number connected to the OmniChat API

const device = await loadWhatsAppDevice()

if (!device) {

return exit('No active WhatsApp numbers in your account. Please connect a WhatsApp number in your OmniChat account:\nhttps://chat.omnidigital.ae/create')

}

if (device.session.status !== 'online') {

return exit(`WhatsApp number (${device.alias}) is not online. Please make sure the WhatsApp number in your OmniChat account is properly connected:\nhttps://chat.omnidigital.ae/${device.id}/scan`)

}

if (device.billing.subscription.product !== 'io') {

return exit(`WhatsApp number plan (${device.alias}) does not support inbound messages. Please upgrade the plan here:\nhttps://chat.omnidigital.ae/${device.id}/plan?product=io`)

}

// Create tmp folder

if (!fs.existsSync(config.tempPath)) {

fs.mkdirSync(config.tempPath, { recursive: true })

}

// Pre-load device labels and team members

const [members] = await Promise.all([

actions.pullMembers(device),

actions.pullLabels(device)

])

// Create labels if they don't exist

await actions.createLabels(device)

// Validate whitelisted and blacklisted members exist

await actions.validateMembers(device, members)

server.device = device

console.log('[info] Using WhatsApp connected number:', device.phone, device.alias, `(ID = ${device.id})`)

// Start server

await server.listen(config.port, () => {

console.log(`Server listening on port ${config.port}`)

})

if (config.production) {

console.log('[info] Validating webhook endpoint...')

if (!config.webhookUrl) {

return exit('Missing required environment variable: WEBHOOK_URL must be present in production mode')

}

const webhook = await actions.registerWebhook(config.webhookUrl, device)

if (!webhook) {

return exit(`Missing webhook active endpoint in production mode: please create a webhook endpoint that points to the chatbot server:\nhttps://chat.omnidigital.ae/${device.id}/webhooks`)

}

console.log('[info] Using webhook endpoint in production mode:', webhook.url)

} else {

console.log('[info] Registering webhook tunnel...')

const tunnel = config.webhookUrl || await createTunnel()

const webhook = await actions.registerWebhook(tunnel, device)

if (!webhook) {

console.error('Failed to connect webhook. Please try again.')

await ngrok.kill()

return process.exit(1)

}

}

console.log('[info] Chatbot server ready and waiting for messages!')

}

main().catch(err => {

exit('Failed to start chatbot server:', err)

})
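Both createTunnel() and sendMessage() retry a failing operation a fixed number of times. Below is a minimal sketch of a generic helper for that pattern, assuming exponential backoff between attempts is acceptable. `withRetries` is a hypothetical name, not part of the project.

```javascript
// Hypothetical retry helper with exponential backoff.
// Runs `task` up to `retries` times and rethrows the last error if all attempts fail.
async function withRetries (task, { retries = 3, baseDelayMs = 500 } = {}) {
  let lastError
  for (let attempt = 0; attempt < retries; attempt++) {
    try {
      return await task(attempt)
    } catch (err) {
      lastError = err
      if (attempt < retries - 1) {
        // Wait baseDelayMs, then 2x, 4x, ... before the next attempt
        const delay = baseDelayMs * 2 ** attempt
        await new Promise(resolve => setTimeout(resolve, delay))
      }
    }
  }
  throw lastError
}
```

For example, the tunnel could be created with `withRetries(() => ngrok.connect({ addr: config.port, authtoken: config.ngrokToken }))`. The original loop also calls `ngrok.kill()` after a failed attempt, so that cleanup would have to move into the task's own error handling.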

import path from 'path'

import fs from 'fs/promises'

import { createReadStream } from 'fs'

import express from 'express'

import config from './config.js'

import * as bot from './bot.js'

import * as actions from './actions.js'

// Create web server

const app = express()

// Middleware to parse incoming JSON request bodies (built into Express since 4.16)

app.use(express.json())

// Index route

app.get('/', (req, res) => {

res.send({

name: 'chatbot',

description: 'WhatsApp ChatGPT powered chatbot for OmniChat',

endpoints: {

webhook: {

path: '/webhook',

method: 'POST'

},

sendMessage: {

path: '/message',

method: 'POST'

},

sample: {

path: '/sample',

method: 'GET'

}

}

})

})

// POST route to handle incoming webhook messages

app.post('/webhook', (req, res) => {

const { body } = req

if (!body || !body.event || !body.data) {

return res.status(400).send({ message: 'Invalid payload body' })

}

if (body.event !== 'message:in:new') {

return res.status(202).send({ message: 'Ignore webhook event: only message:in:new is accepted' })

}

// Send immediate response to acknowledge the webhook event

res.send({ ok: true })

// Process message response in background

bot.processMessage(body, { rag: app.rag }).catch(err => {

console.error('[error] failed to process inbound message:', body.id, body.data.fromNumber, body.data.body, err)

})

})

// Send message on demand

app.post('/message', (req, res) => {

const { body } = req

if (!body || !body.phone || !body.message) {

return res.status(400).send({ message: 'Invalid payload body' })

}

actions.sendMessage(body).then((data) => {

res.send(data)

}).catch(err => {

res.status(+err.status || 500).send(err.response

? err.response.data

: {

message: 'Failed to send message'

})

})

})

// Send a sample message to your own number, or to a number specified in the query string

app.get('/sample', (req, res) => {

const { phone, message } = req.query

const data = {

phone: phone || app.device.phone,

message: message || 'Hello World from OmniChat!',

device: app.device.id

}

actions.sendMessage(data).then((data) => {

res.send(data)

}).catch(err => {

res.status(+err.status || 500).send(err.response

? err.response.data

: {

message: 'Failed to send sample message'

})

})

})

async function fileExists (filepath) {

try {

// The default access mode checks file existence (F_OK)

await fs.access(filepath)

return true

} catch (err) {

return false

}

}

async function fileSize (filepath) {

try {

const stat = await fs.stat(filepath)

return stat.size

} catch (err) {

return -1

}

}

app.get('/files/:id', async (req, res) => {

if (!(/^[a-f0-9]{15,18}$/i.test(req.params.id))) {

return res.status(400).send({ message: 'Invalid ID' })

}

const filename = `${req.params.id}.mp3`

const filepath = path.join(config.tempPath, filename)

if (!(await fileExists(filepath))) {

return res.status(404).send({ message: 'Invalid or deleted file' })

}

const size = await fileSize(filepath)

if (size <= 0) {

return res.status(404).send({ message: 'Invalid or deleted file' })

}

res.set('Content-Length', size)

res.set('Content-Type', 'application/octet-stream')

createReadStream(filepath).pipe(res)

res.once('close', () => {

fs.unlink(filepath).catch(err => {

console.error('[error] failed to delete temp file:', filepath, err.message)

})

})

})

app.use((err, req, res, next) => {

res.status(+err.status || 500).send({

message: `Unexpected error: ${err.message}`

})

})

export default app
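The checks in the POST /webhook route decide which status code the OmniChat webhook receives. Below is a hypothetical pure helper, `classifyWebhook`, that mirrors them so the rules can be tested without starting Express. It assumes, like the route, that only `message:in:new` events are processed.

```javascript
// Hypothetical helper mirroring the POST /webhook checks:
// 400 for malformed payloads, 202 for ignored events, 200 for events the bot processes.
function classifyWebhook (body, acceptedEvents = ['message:in:new']) {
  if (!body || typeof body !== 'object' || !body.event || !body.data) {
    return { status: 400, message: 'Invalid payload body' }
  }
  if (!acceptedEvents.includes(body.event)) {
    return { status: 202, message: `Ignore webhook event: only ${acceptedEvents.join(', ')} is accepted` }
  }
  return { status: 200, message: 'ok' }
}
```

The route sends its acknowledgement before `bot.processMessage` runs in the background, so the webhook request completes immediately even when generating the AI reply takes a while.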

// Cache time-to-live in milliseconds

export const cacheTTL = 10 * 60 * 1000

// In-memory cache store

export const cache = {}

// In-memory store for a simple state machine per chat

// You can use a database instead for persistence

export const state = {}

// In-memory store for message counter per chat

export const stats = {}
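pullMembers() and pullLabels() both reuse a value stored in `cache` while it is younger than `cacheTTL`. Below is a minimal sketch generalizing that pattern. `getOrFetch` is a hypothetical helper; the injectable `now` clock exists only for testing.

```javascript
// Hypothetical helper generalizing the cache pattern of pullMembers() and pullLabels():
// return cache[key].data while it is younger than `ttl` ms, otherwise refetch and store it.
async function getOrFetch (cache, key, ttl, fetcher, { force = false, now = Date.now } = {}) {
  const entry = cache[key]
  if (!force && entry && now() - entry.time < ttl) {
    return entry.data
  }
  const data = await fetcher()
  cache[key] = { data, time: now() }
  return data
}
```

With the store above, a call could look like `getOrFetch(cache, 'labels', cacheTTL, () => axios.get(url, { headers }).then(res => res.data))`. Two concurrent calls on a cold cache will both fetch; that is usually acceptable for small lists like labels and team members.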

{

"name": "whatsapp-chatgpt-bot-demo",

"version": "1.0.0",

"private": true,

"license": "MIT",

"engines": {

"node": ">=16"

},

"type": "module",

"scripts": {

"start": "node main.js",

"dev": "DEV=true npm run start",

"lint": "./node_modules/.bin/standard ."

},

"dependencies": {

"axios": "^1.3.6",

"express": "^4.18.2",

"ngrok": "^5.0.0-beta.2",

"nodemon": "^2.0.22",

"openai": "^4.14.2"

},

"devDependencies": {

"standard": "^17.1.0"

}

}

Questions

Can I train the AI to behave in a customized way?

Yes! You can provide customized instructions to the AI to determine the bot behavior, identity and more.

To set your instructions, enter the text in config.js > botInstructions.
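The instructions are sent to the model as the first ("system") message, followed by recent chat history and the new user message, as processMessage does before each AI call. Below is a minimal sketch of that assembly. `buildPrompt` is a hypothetical helper, and the default history limit of 20 matches the fallback used for `config.limits.chatHistoryLimit`.

```javascript
// Hypothetical sketch: how botInstructions frames every request to the model.
function buildPrompt (botInstructions, history, userText, historyLimit = 20) {
  // Drop empty messages and keep only the most recent ones
  const recent = history
    .filter(message => message.content)
    .slice(-historyLimit)
  const messages = [{ role: 'system', content: botInstructions }, ...recent]
  const last = messages[messages.length - 1]
  // Avoid duplicating the current message if history already ends with it
  if (last.role !== 'user' || last.content !== userText) {
    messages.push({ role: 'user', content: userText })
  }
  return messages
}
```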

Can I instruct the AI not to reply about unrelated topics?

Yes! By defining a set of clear and explicit instructions, you can teach the AI to stick to its role and politely decline to answer questions unrelated to your business.

For instance, you can add the following in your instruction:

You are a smart virtual customer support assistant who works for OmniChat.

Be polite, be gentle, be helpful and empathetic.

Politely reject any queries that are not related to your customer support role or OmniChat itself.

Strictly stick to your role as customer support virtual assistant for OmniChat.

Can I customize the chatbot response and behavior?

For sure! The code is available for free and you can adapt it as much as you need.

You just need to have some JavaScript/Node.js knowledge, and you can always ask ChatGPT to help you write the code you need.

How to stop the bot from replying to certain chats?

Simply assign the chat(s) to any agent using the OmniChat web chat or the API.

Alternatively, you can set blacklisted labels in the config.js > skipChatWithLabels field, then add one of these labels to each chat you want the bot to ignore. You can assign labels to chats using the OmniChat web chat or the API.
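Both options above come down to a per-chat check before the bot replies. Below is a hypothetical sketch of such a check. The `chat.owner.agent` field is an assumption about the chat payload shape (inferred from the `{ agent }` body that assignChat() sends), so verify it against the webhook data you actually receive.

```javascript
// Hypothetical check: the bot stays out of chats assigned to an agent
// or tagged with any label listed in skipChatWithLabels.
// ASSUMPTION: an assigned chat exposes the agent ID as chat.owner.agent.
function isChatHandledByBot (chat, { skipChatWithLabels = [] } = {}) {
  if (chat.owner?.agent) return false
  const labels = chat.labels || []
  return !labels.some(label => skipChatWithLabels.includes(label))
}
```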

Do I have to use Ngrok?

No, you don't. Ngrok is only used for development/testing purposes when running the program from your local computer. If you run the program on a cloud server, you most likely won't need Ngrok as long as your server is reachable from the Internet via a public domain (e.g. bot.company.com) or a public IP.

In that case, you simply need to provide your server's full URL, ending with /webhook, when running the bot program:

WEBHOOK_URL=https://bot.company.com:8080/webhook node main

Note: https://bot.company.com:8080 must point to the bot program itself running in your server and it must be network reachable using HTTPS for secure connection.
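A malformed or non-HTTPS WEBHOOK_URL is a common cause of missed messages, so it can help to validate the value before starting the bot. Below is a hypothetical `normalizeWebhookUrl` helper that uses the standard `URL` class: it rejects anything that is not an absolute HTTPS URL and makes sure the path ends with /webhook. Whichever form you pass, the URL registered in OmniChat must end up pointing at the bot's /webhook route.

```javascript
// Hypothetical pre-flight check for WEBHOOK_URL:
// requires an absolute HTTPS URL and normalizes the path to end with /webhook.
function normalizeWebhookUrl (value) {
  let url
  try {
    url = new URL(value)
  } catch {
    throw new Error(`Invalid WEBHOOK_URL: ${value}`)
  }
  if (url.protocol !== 'https:') {
    throw new Error('WEBHOOK_URL must use HTTPS')
  }
  // Strip trailing slashes, then append /webhook if it is missing
  const path = url.pathname.replace(/\/+$/, '')
  url.pathname = path.endsWith('/webhook') ? path : `${path}/webhook`
  return url.toString()
}
```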

What happens if the program fails?

Please check the error in the terminal and make sure you are running the program with enough permissions to listen on port 8080 on localhost.

How can I keep the bot from replying to certain chats?

By default the bot will ignore messages sent in group chats, blocked and archived chats/contacts.

Besides that, you can blacklist or whitelist specific phone numbers, and skip chats that have specific labels, to control which chats are handled by the bot.

See numbersBlacklist, numbersWhitelist, and skipChatWithLabels options in config.js for more information.
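The number filters can follow the same logic that selectAssignMember() applies to teamBlacklist and teamWhitelist: a blacklisted number is always skipped, and a non-empty whitelist restricts the bot to the listed numbers. Below is a hypothetical sketch of that logic. Comparing numbers by digits only is an assumption about how the config values are formatted.

```javascript
// Hypothetical number filter mirroring the team blacklist/whitelist logic.
// ASSUMPTION: numbers are compared by digits only, so '+1 555-0100' matches '15550100'.
function isNumberAllowed (phone, { numbersWhitelist = [], numbersBlacklist = [] } = {}) {
  const digits = value => String(value).replace(/\D/g, '')
  const number = digits(phone)
  if (numbersBlacklist.some(entry => digits(entry) === number)) return false
  if (numbersWhitelist.length && !numbersWhitelist.some(entry => digits(entry) === number)) return false
  return true
}
```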

Can I run this bot on my server?

Absolutely! Just deploy or transfer the program source code to your server and run the start command from there. The requirements are the same, no matter where you run the bot.

Also remember to set the WEBHOOK_URL environment variable to your server's publicly accessible URL, as explained before.